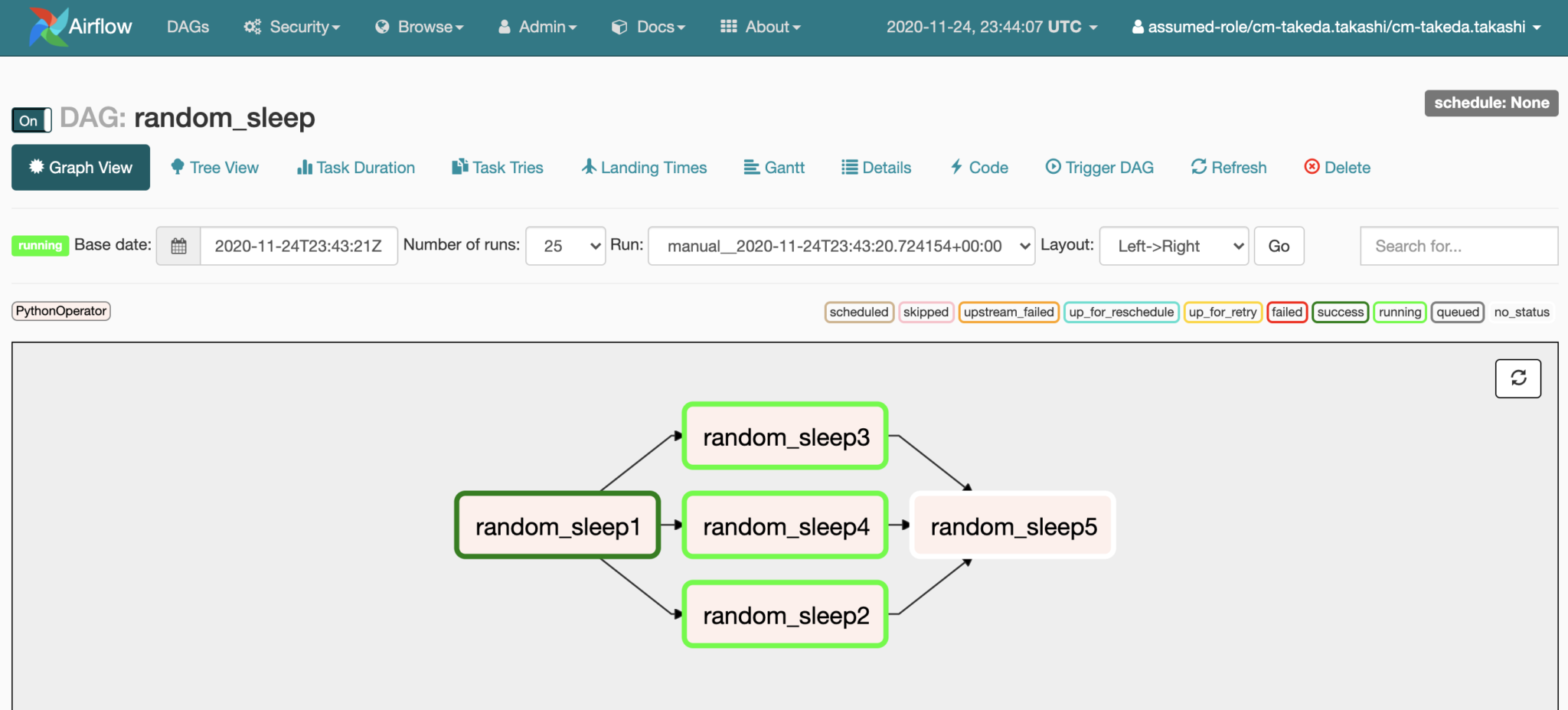

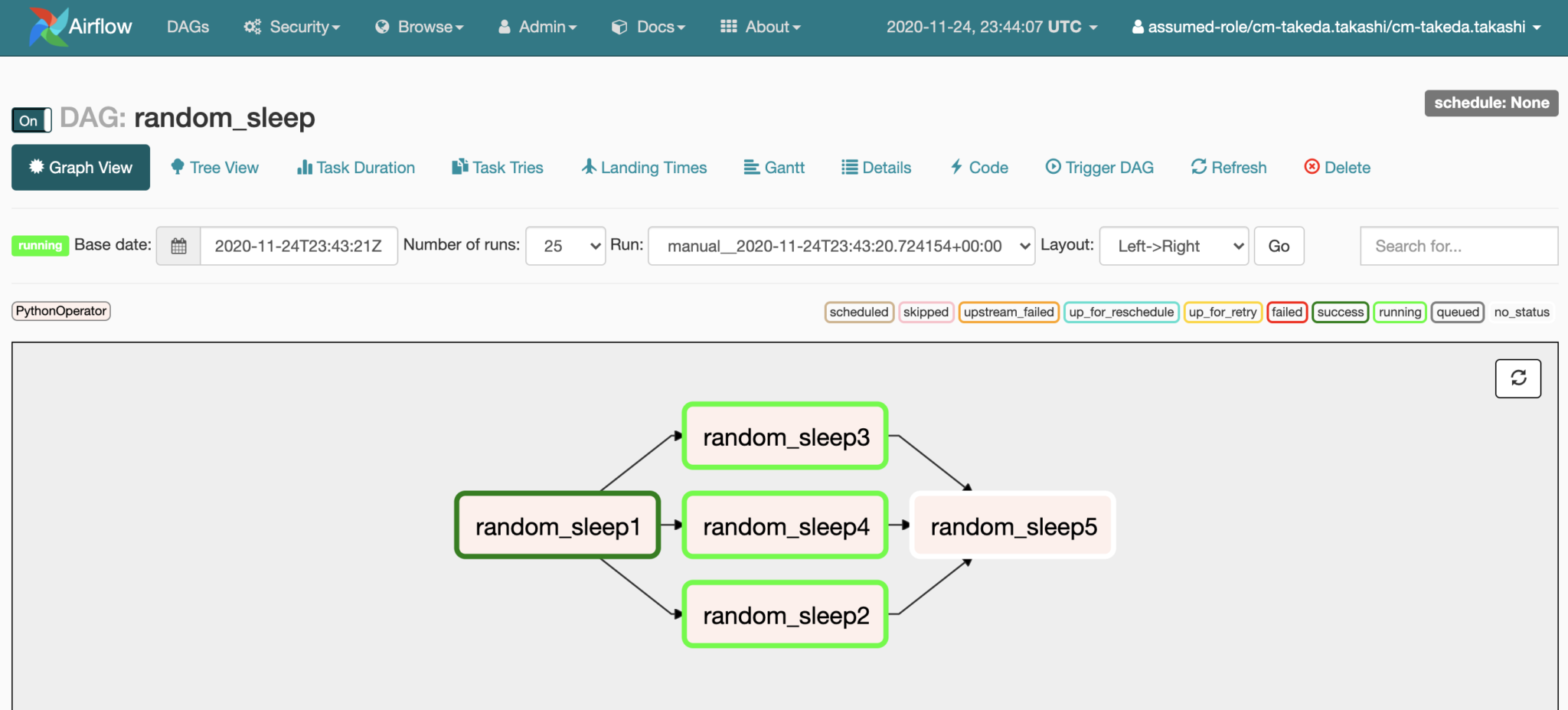

This course delves into Amazon Managed Workflows for Apache Airflow (MWAA). MWAA is a great service for anyone who already uses Apache Airflow and wants a better way to set up the service, schedule jobs, and manage their workflows.

In this course, you will:

- Understand how Amazon Managed Workflows for Apache Airflow is implemented within AWS.
- Learn about DAGs (Directed Acyclic Graphs), which Apache Airflow uses to run your workflows.
- Understand the key components required to set up your own managed Airflow environment.

To get the most out of this course, you should have a decent understanding of cloud computing and cloud architectures, specifically on Amazon Web Services. Some background knowledge of Apache Airflow will help, but it is not a hard requirement. Basic knowledge of ETL pipelines and state machines would also be beneficial.

Data scientists, architects, and DevOps engineers have been using Apache Airflow, a leading open-source orchestration environment, to create and execute workflows that define ETL jobs, build machine learning data pipelines, and even handle complicated DevOps tasks.

Amazon Managed Workflows for Apache Airflow is a managed service designed to help you integrate Apache Airflow directly into AWS with minimal setup and the quickest time to execution. Like any managed service, AWS is in charge of scaling and managing your Apache Airflow environments and helps you orchestrate them. MWAA also handles managing worker fleets, installing dependencies, scaling the system up and down, logging and monitoring, providing authorization through IAM, and even single sign-on. A managed workflow system such as this eliminates much of that hands-on operational work and gives your engineers and data scientists more time to focus on the tasks they are good at.

Before we start talking about MWAA itself, let's level set and look at what Apache Airflow is.

Apache Airflow is an open-source platform built around the idea of programmatically authoring, scheduling, and automating workflows. These workflows are commonly associated with collecting data through complex data pipelines, but the technology has many other use cases. Among other things, you can use Apache Airflow to create robust ETL pipelines that extract data from multiple sources. Apache Airflow is used by a multitude of large corporations, small businesses, and hobby developers.

If you are unfamiliar with what a workflow is, you can think of it as a simple state machine: a state machine watches a set of parameters and makes decisions based on those inputs. Your workflows can be as simple or as complex as you desire.

Each workflow you build must be represented as a Directed Acyclic Graph, also known as a DAG. A DAG helps you visually and programmatically manage and structure the processes within your workflows. Your DAGs are a collection of all the tasks you want Apache Airflow to run on your behalf. DAGs are written in Python, a fairly easy programming language to get started with, and they define the relationships and dependencies within your workflow. You can have as many or as few DAGs as you desire.

A simple DAG might consist of only a few tasks, and you are in charge of how those tasks are executed. For example, if your DAG has Task A, Task B, and Task C, you might configure the workflow so that Task A must complete successfully before Task B can run, while Task C runs whenever it can. As you can see, the DAG describes how the workflow operates, but it doesn't actually perform the operations itself.
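The Task A / Task B / Task C example, where A must finish before B and C may run whenever it can, can be modeled in a few lines of plain Python. This is a minimal sketch, not Airflow code, and it does not use Airflow's API: it relies only on the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to show what a dependency graph by itself tells a scheduler, namely which tasks may start now, which must wait, and that a cycle is rejected. The task names `task_a`, `task_b`, `task_c` and the helper `schedule_in_waves` are hypothetical, chosen for illustration.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical task names for the Task A / B / C example. Each key maps a
# task to the set of upstream tasks that must finish before it may start.
dependencies: dict[str, set[str]] = {
    "task_a": set(),        # no upstream tasks
    "task_b": {"task_a"},   # B waits for A to complete
    "task_c": set(),        # no upstream tasks, so C can run whenever it can
}


def schedule_in_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into 'waves' (hypothetical helper, not an Airflow API).

    Every task in a wave has all of its upstream tasks completed in earlier
    waves, so tasks within one wave could run in parallel. Raises CycleError
    if the graph contains a cycle, i.e. if it is not a DAG.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # validates the graph; raises CycleError on a cycle
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        # Mark every ready task as finished. Nothing is executed here: like a
        # DAG, this only describes the order, not the work itself.
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    print(schedule_in_waves(dependencies))
    # A graph where x waits for y and y waits for x is rejected.
    try:
        schedule_in_waves({"x": {"y"}, "y": {"x"}})
    except CycleError:
        print("cycle detected")
```

Running it prints `[['task_a', 'task_c'], ['task_b']]` followed by `cycle detected`: Task A and Task C have no upstream tasks, so both are ready immediately, while Task B becomes ready only after Task A is marked done. In a real Airflow DAG file, the same A-before-B relationship is commonly declared between task objects with the bitshift syntax (`task_a >> task_b`), and Airflow's scheduler, not the DAG definition, decides when each task actually runs.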
